Since last night the internet has been all atwitter about a commentary* by Dan Gilbert and colleagues about the recent and (in my view) misnamed Reproducibility Project: Psychology (RPP). In this commentary, Gilbert et al. criticise the RPP on a number of technical grounds. They assert that the sampling was non-random and biased, and that the conclusion that psychology faces a replicability crisis is essentially unfounded, particularly as the science media and the blogosphere have portrayed it. Some of their points are questionable, to say the least, and some, like their interpretation of confidence intervals, are simply statistically wrong. But I won’t talk about this here.

One point they raise is the oft-repeated argument that replications differed in some way from the original research. We’ve already discussed this ad nauseam in the past and there is little point going over it again. Exact replication of the methods and conditions of an original experiment can test the replicability of a finding. Indirect replications that loosely test similar hypotheses instead tell us about the generalisability of the idea, which in turn tells us about the robustness of the processes we posited. Everybody (hopefully) knows this. Both are important aspects of scientific progress.

The main problem is that most debates about replicability go down that same road, with people arguing about whether the replication was of sufficient quality to yield interpretable results. Gilbert and co. give the example of a replication in the RPP that used the same video stimuli as the original study. The original study was conducted in the US, but the replication was carried out in the Netherlands, and the dependent variable concerned an issue (race relations and affirmative action) that had no relevance to the participants in the replication. Other examples like this were brought up in previous debates about replication studies. A similar argument has also been made about differences in language context between the original Bargh social priming studies and their replications. In my view, some of these points have merit, and the example raised by Gilbert et al. is certainly worth a facepalm or two. It does seem mind-boggling that anyone could have thought it valid to replicate a result about a US-specific issue in a liberal European country whilst using the original stimuli in English.

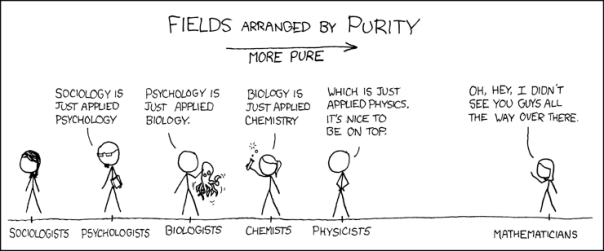

But this example illustrates a much larger problem, and in my mind it is actually the crux of the matter: psychology, or at least most forms of more traditional psychology, does not lend itself very well to replication. As I am wont to point out, I am not a psychologist but a neuroscientist. I do, however, work in a psychology department, and my field obviously overlaps considerably with traditional psychology. I also think many subfields of experimental psychology work in much the same way as other so-called “harder” sciences. This is not to say that neuroscience, psychophysics, or other fields are free of the problems with replicability, publication bias, and other concerns that plague science as a whole. We know they are not. But in my view, the social sciences and the loftier sides of psychology, which deal with vague concepts of the mind and psyche, have an additional problem: they lack the lawful regularity of effects that scientific discovery requires.

For example, we are currently conducting an fMRI experiment in which we replicate a previous finding. We are using the approach I have long advocated: if you want to replicate a result, design experiments that do both, replicating the previous result while also addressing a novel question. The details of the experiment are not very important. (If we ever complete this experiment and publish it, you can read about it then…) What matters is that we very closely replicate the methods of a study from 2012, which in turn closely replicated the methods of one from 2008. The results are pretty consistent across all three instances of the experiment. The 2012 study offered a somewhat different interpretation of the 2008 findings. Our experiment now adds more spatially sensitive methods and paints yet another somewhat different picture. Since we’re not finished, I can’t yet tell you how interesting this difference is. It is, however, already blatantly obvious that the general finding is the same. Had we analysed our experiment the same way as the 2008 study, we would have reached the same conclusions.

The whole idea of science is to find regularities in our complex observations of the world, to uncover lawfulness in the chaos. The entire empirical approach rests on the idea that I can perform an experiment with particular parameters and repeat it with the same results, blurred somewhat by random chance. Estimating generalisability tells me how tweaking the parameters affects the results, and thus allows me to determine the laws that govern the whole system.

And this right there is where much of psychology has a big problem. I agree with Gilbert et al. that repeating a social effect found in US participants with identical methods in Dutch participants is not a direct replication. But what would be? They discuss how the same experiment was then repeated in the US, yielding results weakly consistent with the original findings. But this isn’t a direct replication either. It does not suffer from the same cultural and language differences as the replication in the Netherlands did, but it has other contextual discrepancies. Even repeating exactly the same experiment in the original Stanford(?) population would not necessarily be equivalent, because of the time that has passed and the ways cultural factors have changed. A replication is simply not possible.

For all the failings that all fields of science have, this is a problem my research area does not suffer from. (To clarify: “my field” is not all of cognitive neuroscience, much of which is essentially straight-up psychology with the brain tagged on. And while I don’t see myself as a psychologist, I certainly acknowledge that my research also involves psychology.) Our experiment is being done on people living in London. The 2012 study was presumably run mainly on Belgians in Belgium. As far as I know, the 2008 study was run in the Midwestern US. We are asking a question about a fairly fundamental aspect of human brain function. This does not mean that there are no population differences, but our prior that such differences affect the results very substantially is pretty small. Similarly, the methods can certainly modulate the results somewhat, but I would expect the effects to be fairly robust to minor methodological changes. In fact, whenever small changes in the method (say, the stimulus duration or the particular scanning sequence used) seem to obliterate a result completely, my first instinct is usually that the finding is not robust and thus unlikely to be meaningful.

From where I’m standing, social and other forms of traditional psychology can’t say the same. Small contextual or methodological differences can quite plausibly skew the results because the mind is a damn complex thing. For that reason alone, we should expect psychology to have low replicability. We should also expect effect sizes to be pretty small (i.e. smaller than is common in the literature), because they will always be diluted by a multitude of independent factors. Perhaps more than any other field, psychology can benefit from preregistering experimental protocols, which separates the exploratory garden path from hypothesis-driven confirmatory results.

I agree that a direct replication of a contextually dependent effect in a different country and at a different time makes little sense, but that is no excuse. If you simply say that effects are so context-specific that they are difficult to replicate, you are bound to end up chasing lots of phantoms. And that isn’t science – not even a “soft” one.